Prompt Flow offers a comprehensive suite of development tools tailored for LLM-based AI applications. It simplifies the entire development process, from initial ideation to production deployment, ensuring efficient prompt engineering and top-tier application quality. Dive into the world of Prompt Flow and discover how it integrates LLMs, prompts, Python code, and more into a cohesive, executable workflow.

Prompt Flow: Streamlining AI Application Development

Prompt Flow is an innovative suite of development tools introduced by Microsoft, aiming to simplify the entire development process of LLM-based AI applications. From the initial ideation phase to prototyping, testing, evaluation, and finally, production deployment and monitoring, Prompt Flow is designed to make prompt engineering more straightforward and efficient.

Key Features of Prompt Flow:

- Executable Workflows: It allows developers to create flows that integrate LLMs, prompts, Python code, and other tools into a cohesive, executable workflow.

- Debugging and Iteration: Developers can easily debug and iterate their flows, especially when interacting with LLMs.

- Evaluation: With Prompt Flow, you can evaluate your flows and compute quality and performance metrics using extensive datasets.

- Integration with CI/CD: It seamlessly integrates testing and evaluation into your CI/CD system, ensuring the optimal quality of your flow.

- Deployment Flexibility: You can deploy your flows to your preferred serving platform or incorporate them directly into your app’s codebase.

- Collaboration: An optional but highly recommended feature is the ability to collaborate with your team using the cloud version of Prompt Flow in Azure AI.
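To make the "executable workflow" idea concrete, here is a minimal sketch in plain Python. It does not use the Prompt Flow SDK: the node functions, the `run_flow` helper, and the stubbed `fake_llm` are all hypothetical stand-ins, chosen only to show how a prompt, an LLM call, and a Python step can be chained so that each node consumes the outputs of earlier ones.

```python
from typing import Callable, Dict, List, Tuple

# Each node is (name, function, names of the values it consumes).
Node = Tuple[str, Callable[..., object], List[str]]


def render_prompt(question: str) -> str:
    """Prompt node: fill a template with the flow input."""
    return f"Answer in one word. Question: {question}"


def fake_llm(prompt: str) -> str:
    """LLM node stand-in (assumption): a real flow would call a model here."""
    return "  Paris\n" if "France" in prompt else "  unknown\n"


def clean_answer(raw: str) -> str:
    """Python node: post-process the raw model output."""
    return raw.strip().lower()


def run_flow(nodes: List[Node], inputs: Dict[str, object]) -> Dict[str, object]:
    """Run nodes in listed order, passing each node the values it names."""
    values = dict(inputs)
    for name, func, deps in nodes:
        values[name] = func(*(values[d] for d in deps))
    return values


# The flow: input "question" -> prompt -> llm -> answer.
FLOW: List[Node] = [
    ("prompt", render_prompt, ["question"]),
    ("llm", fake_llm, ["prompt"]),
    ("answer", clean_answer, ["llm"]),
]

result = run_flow(FLOW, {"question": "What is the capital of France?"})
print(result["answer"])
```

Because every intermediate value is kept in the returned dictionary, each node's output can be inspected on its own, which is the property that makes step-by-step debugging of a flow practical.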

Microsoft encourages the community to help improve Prompt Flow and welcomes discussions, issue reports, and PR submissions at https://github.com/microsoft/promptflow.

Prompt Flow Tutorials: This section offers a collection of flow samples and tutorials. Here are some of the key tutorials and samples:

- Getting Started with Prompt Flow: A step-by-step guide to initiate your first flow run.

- Chat with PDF: A comprehensive tutorial on constructing a high-quality chat application with Prompt Flow. This includes flow development and evaluation using metrics. There’s also a section on how to use the Prompt Flow Python SDK to test, evaluate, and experiment with the “Chat with PDF” flow.

- Connection Management: Manage various types of connections using both SDK and CLI.

- Run Prompt Flow in Azure AI: A quick start guide to execute a flow in Azure AI and evaluate it.

Samples:

Standard Flow:

- Basic: This is a fundamental flow that utilizes a prompt and a Python tool.

- Basic with Connection: This flow demonstrates the use of a custom connection combined with a prompt and a Python tool.

- Basic with Built-in LLM: This is a basic flow that employs the built-in LLM tool.

- Customer Intent Extraction: This flow is crafted from pre-existing Langchain Python code.

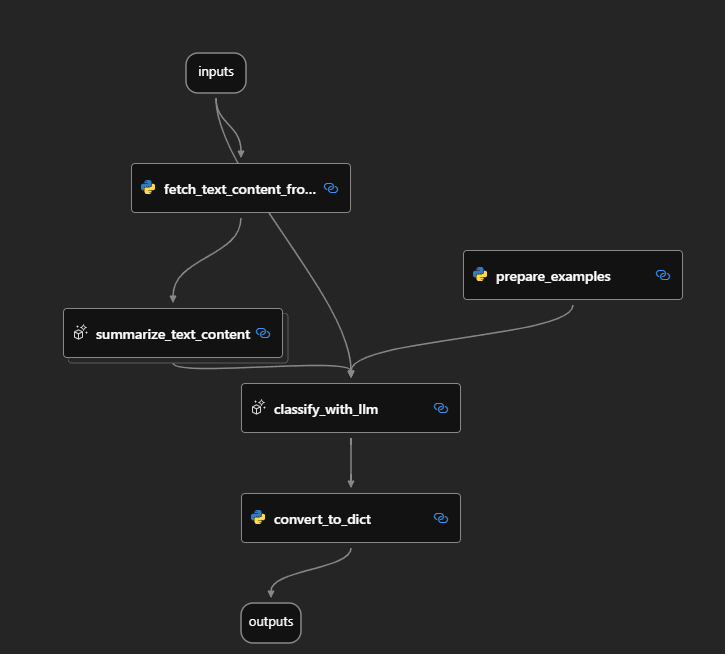

- Web Classification: This flow showcases multi-class classification with LLM. When provided with a URL, it classifies the URL into a specific web category using just a few shots, simple summarization, and classification prompts.

- Autonomous Agent: This flow highlights the construction of an AutoGPT flow. The flow autonomously determines how to apply given functions to achieve its goal, which in this case is film trivia. It provides accurate and up-to-date details about movies, directors, actors, and more.
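The few-shot classification pattern behind the Web Classification sample can be sketched as follows. This is an illustration only, not the sample's actual code: the category names, the example rows, and the helper names `build_prompt` and `parse_category` are assumptions made for the sketch.

```python
from typing import List, Tuple

# Hypothetical categories and few-shot examples (url, summary, label).
CATEGORIES: List[str] = ["News", "Shopping", "Academic"]
EXAMPLES: List[Tuple[str, str, str]] = [
    ("https://example.com/store/shoes", "Online shop listing running shoes.", "Shopping"),
    ("https://example.org/papers/42", "Abstract of a research paper.", "Academic"),
]


def build_prompt(url: str, summary: str,
                 examples: List[Tuple[str, str, str]],
                 categories: List[str]) -> str:
    """Assemble a few-shot prompt: instruction, labeled examples, then the query."""
    lines = [f"Classify the page into one of: {', '.join(categories)}.", ""]
    for ex_url, ex_summary, ex_label in examples:
        lines += [f"URL: {ex_url}", f"Summary: {ex_summary}", f"Category: {ex_label}", ""]
    lines += [f"URL: {url}", f"Summary: {summary}", "Category:"]
    return "\n".join(lines)


def parse_category(llm_output: str, categories: List[str], default: str = "Unknown") -> str:
    """Map free-form model output onto a known category, else the default."""
    text = llm_output.strip().lower()
    for category in categories:
        if category.lower() in text:
            return category
    return default


prompt = build_prompt("https://example.net/daily", "Headlines from today.", EXAMPLES, CATEGORIES)
print(prompt)
```

Parsing the model output back onto a fixed label set keeps the downstream evaluation simple, since predictions can then be compared to ground truth by string equality.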

Chat Flow:

- Chat with Wikipedia: This flow demonstrates a Q&A session with GPT-3.5, leveraging information from Wikipedia to ground the answers.

- Chat with PDF: This flow allows users to pose questions about the content of a PDF file and receive answers.

Evaluation Flow:

- Eval Classification Accuracy: This flow illustrates the process of evaluating the performance of a classification system.

These samples provide a comprehensive overview of the capabilities of Prompt Flow, showcasing various use cases and functionalities.
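As a rough idea of what an evaluation flow such as Eval Classification Accuracy computes, the sketch below scores a batch of predictions against ground truth. The function name `accuracy` and the sample labels are hypothetical, and the case-insensitive comparison is a choice made for this sketch, not necessarily what the sample does.

```python
from typing import List


def accuracy(predictions: List[str], ground_truth: List[str]) -> float:
    """Fraction of predictions that match ground truth, ignoring case and padding."""
    if len(predictions) != len(ground_truth):
        raise ValueError("predictions and ground_truth must have the same length")
    if not predictions:
        return 0.0
    correct = sum(
        p.strip().lower() == g.strip().lower()
        for p, g in zip(predictions, ground_truth)
    )
    return correct / len(predictions)


# Hypothetical outputs from a batch run of a classification flow.
preds = ["News", "shopping ", "Academic", "News"]
truth = ["News", "Shopping", "Academic", "Shopping"]
print(accuracy(preds, truth))
```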

For more, try the other Prompt Flow examples in the GitHub repository linked above.

The cloud version of Prompt Flow in Azure AI can improve team collaboration in AI application development in several ways:

- Centralized Workspace: With cloud-based tools, teams can work in a centralized environment, ensuring that everyone has access to the same resources, data, and tools. This eliminates the inconsistencies that might arise from using different local setups.

- Real-time Collaboration: Much like how tools like Google Docs allow multiple users to work on a document simultaneously, cloud-based AI development platforms can enable developers to collaborate on models, data pipelines, and other aspects of AI development in real-time.

- Version Control and Tracking: Cloud platforms often come with built-in version control, allowing teams to track changes, revert to previous versions, and understand the evolution of a project. This is crucial for AI development, where small changes can have significant impacts.

- Scalability: One of the significant advantages of cloud platforms is the ability to scale resources as needed. Teams can quickly scale up computing resources during intensive tasks like training large models and scale down during periods of inactivity, leading to cost savings and efficiency.

- Integrated Tools and Services: Azure AI, like other cloud platforms, offers a suite of integrated tools and services. This means that teams can access data storage, machine learning services, analytics tools, and more, all from a single platform, streamlining the development process.

- Enhanced Security: Cloud platforms invest heavily in security, ensuring that data, models, and other assets are protected. This is especially important for AI development, where proprietary models and datasets need to be safeguarded.

- Accessibility: Being cloud-based means that team members can access the platform from anywhere, at any time, as long as they have an internet connection. This facilitates remote work and collaboration across different geographies.

- Continuous Integration and Deployment (CI/CD): Cloud platforms like Azure AI support CI/CD pipelines, allowing for automated testing, integration, and deployment of AI models. This ensures that teams can quickly iterate and deploy models, enhancing productivity.

- Shared Knowledge Base: With everything stored in the cloud, teams can create a shared knowledge base, including documentation, best practices, and learnings from previous projects. This can be invaluable for onboarding new team members or referencing past work.

- Cost Efficiency: By leveraging cloud resources, teams can avoid the upfront costs of purchasing and maintaining physical hardware. They can utilize pay-as-you-go models, ensuring they only pay for the resources they use.

The cloud version of Prompt Flow in Azure AI can transform team collaboration by providing a centralized, scalable, and integrated platform that enhances productivity, efficiency, and innovation in AI application development.

Prompt Flow changes the approach to prompt engineering in LLM (large language model)-based AI applications in several key ways:

Unified Development Environment: Prompt Flow offers a comprehensive suite of tools that streamline the entire development cycle of LLM-based AI applications. This unified environment means that developers no longer need to juggle multiple tools or platforms, leading to a more efficient and cohesive development process.

Executable Workflows: One of the standout features of Prompt Flow is its ability to create flows that integrate LLMs, prompts, Python code, and other tools into a cohesive, executable workflow. This structured approach ensures that different components of an AI application can interact seamlessly.

Iterative Debugging: Prompt Flow facilitates the debugging and iteration of flows, especially when interacting with LLMs. This means that developers can quickly identify and rectify issues, leading to more robust and reliable AI applications.

Evaluation Metrics: With Prompt Flow, developers can evaluate their flows using extensive datasets, computing quality and performance metrics. This data-driven approach ensures that AI applications are not only functional but also optimized for performance.

Integration with CI/CD: Prompt Flow’s ability to integrate testing and evaluation into CI/CD systems means that AI applications can be continuously tested, integrated, and deployed. This continuous development approach ensures that applications are always up-to-date and of the highest quality.

Deployment Flexibility: Developers have the freedom to deploy their flows to their preferred serving platform or integrate them directly into their app’s codebase. This flexibility ensures that AI applications can be deployed in a manner that best suits the specific needs and infrastructure of a project.

Prompt Variability: Prompt Flow introduces features that allow developers to tune prompts using variants. This means that developers can experiment with different prompt structures and phrasings to determine which ones yield the best results, leading to more effective LLM interactions.

Collaborative Features: With features that support collaboration, such as the cloud version of Prompt Flow in Azure AI, teams can work together more effectively, sharing resources, data, and insights.

Extensive Documentation: Prompt Flow provides comprehensive guides, tutorials, and references for its SDK, CLI, and extensions. This wealth of information ensures that developers have all the resources they need to effectively leverage the platform.

Open for Community Contribution: Microsoft’s approach to making Prompt Flow open for discussions, issue reporting, and PR submissions means that the platform can benefit from the collective expertise and insights of the broader developer community.

Prompt Flow has transformed prompt engineering by providing a unified, data-driven, and collaborative platform that streamlines the development, testing, and deployment of LLM-based AI applications.
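The prompt-variant tuning mentioned above amounts to running the same dataset through several prompt templates and comparing a metric. The sketch below shows that loop in plain Python. Everything in it is hypothetical: the variant names, the tiny dataset, and the deterministic `fake_llm` stand-in, which is rigged to answer tersely only when the prompt asks for brevity so that the two variants score differently.

```python
from typing import Callable, Dict, List

# Two hypothetical prompt variants for the same task.
VARIANTS: Dict[str, str] = {
    "variant_0": "Q: {question}\nA:",
    "variant_1": "Answer briefly.\nQ: {question}\nA:",
}

# Tiny hypothetical evaluation set.
DATA: List[Dict[str, str]] = [
    {"question": "2+2?", "expected": "4"},
    {"question": "Capital of France?", "expected": "paris"},
]

KNOWN = {"2+2?": "4", "Capital of France?": "Paris"}


def fake_llm(prompt: str) -> str:
    """Stand-in model (assumption): terse only when the prompt says 'briefly'."""
    for question, answer in KNOWN.items():
        if question in prompt:
            return answer if "briefly" in prompt else f"The answer is {answer}."
    return ""


def score_variant(template: str, data: List[Dict[str, str]],
                  llm: Callable[[str], str]) -> float:
    """Exact-match accuracy of one prompt template over the dataset."""
    hits = 0
    for row in data:
        output = llm(template.format(question=row["question"]))
        hits += output.strip().lower() == row["expected"]
    return hits / len(data)


scores = {name: score_variant(t, DATA, fake_llm) for name, t in VARIANTS.items()}
best = max(scores, key=scores.get)
print(scores, best)
```

With a real model the scores would be noisy rather than 0 or 1, so a larger dataset and a metric suited to the task would be needed before picking a winner.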

The seamless integration of testing and evaluation into CI/CD (Continuous Integration/Continuous Deployment) systems plays a pivotal role in enhancing the quality assurance (QA) process for AI applications. Here’s how:

Continuous Feedback Loop: Integrating testing and evaluation into CI/CD provides developers with immediate feedback on their code changes. This continuous feedback ensures that any issues or regressions are identified and addressed promptly, reducing the chances of faulty code making it to production.

Automated Testing: CI/CD systems can automatically run a suite of tests every time there’s a code change. For AI applications, this can include unit tests, integration tests, and even performance tests on the models. Automated testing ensures consistent quality checks without manual intervention.

Reproducibility: CI/CD systems ensure that tests are run in a consistent environment. This reproducibility is crucial for AI, where slight changes in data or environment can lead to different outcomes. A consistent testing environment ensures that results are reliable and comparable over time.

Early Detection of Issues: By continuously integrating and testing, issues are detected early in the development cycle. Early detection is more cost-effective as it’s generally easier and cheaper to fix issues earlier rather than later in the development process.

Model Validation: For AI applications, it’s crucial to validate the performance of models regularly. Integrating model evaluation into CI/CD means that every time a model is updated or trained with new data, its performance metrics are evaluated and compared against benchmarks, ensuring model quality.

Data Drift Monitoring: AI models can be sensitive to changes in input data. By integrating data validation checks into CI/CD, teams can monitor for data drift and ensure that models are still performing as expected when faced with new or evolving data.

Streamlined Deployment: Once code and models pass all tests and evaluations, they can be automatically deployed to production or staging environments. This streamlined deployment ensures that high-quality updates reach users faster.

Rollbacks: If issues are detected post-deployment, CI/CD systems allow for quick rollbacks to previous stable versions, minimizing potential disruptions or damages.

Collaboration and Transparency: Integrating testing and evaluation into CI/CD promotes a culture of collaboration and transparency. Teams can see the status of tests, understand failures, and work together to address them, fostering a collective responsibility for quality.

Documentation and Traceability: CI/CD systems often come with tools that document the testing and deployment process. This documentation provides a clear traceability of changes, tests conducted, and evaluation results, which is crucial for understanding the evolution of an AI application and for compliance purposes.

The integration of testing and evaluation into CI/CD systems provides a structured, automated, and consistent approach to quality assurance. For AI applications, where the quality of models and data is paramount, this integration ensures that applications are robust, reliable, and meet the desired performance benchmarks.
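One way to wire evaluation into a CI pipeline is a small gate script that fails the build when metrics fall below a threshold or regress against a baseline. The sketch below is an assumption about how such a gate might look, not a Prompt Flow feature: the threshold values, the `max_drop` tolerance, and the function names are all hypothetical.

```python
from typing import Dict, List, Optional

# Hypothetical minimum acceptable values per metric.
THRESHOLDS: Dict[str, float] = {"accuracy": 0.80}


def check_metrics(metrics: Dict[str, float],
                  thresholds: Dict[str, float],
                  baseline: Optional[Dict[str, float]] = None,
                  max_drop: float = 0.02) -> List[str]:
    """Return a list of human-readable failures; empty means the gate passes."""
    failures: List[str] = []
    # Absolute check: every required metric must be present and high enough.
    for name, minimum in thresholds.items():
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: missing from evaluation results")
        elif value < minimum:
            failures.append(f"{name}: {value:.3f} is below threshold {minimum:.3f}")
    # Relative check: no metric may drop more than max_drop versus the baseline.
    for name, old in (baseline or {}).items():
        new = metrics.get(name)
        if new is not None and old - new > max_drop:
            failures.append(f"{name}: regressed from {old:.3f} to {new:.3f}")
    return failures


def gate_exit_code(metrics: Dict[str, float],
                   baseline: Optional[Dict[str, float]] = None) -> int:
    """Print failures and return a process exit code (0 = pass, 1 = fail)."""
    failures = check_metrics(metrics, THRESHOLDS, baseline)
    for failure in failures:
        print("FAIL", failure)
    return 1 if failures else 0


print(gate_exit_code({"accuracy": 0.85}, baseline={"accuracy": 0.90}))
```

In a pipeline, the returned code would be passed to `sys.exit` so that a failed gate stops the deployment step.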

Deploying Flows with Prompt Flow

Deploy a Flow: With Prompt Flow, users have the flexibility to deploy their flows across various platforms. Whether you’re looking to deploy locally on a development server, use containerization with Docker, or scale with Kubernetes, Prompt Flow has got you covered.

Deployment Options:

- Development Server: Ideal for testing and iterative development.

- Docker: Containerize your flow for consistent and scalable deployments.

- Kubernetes: Deploy at scale, managing your flows across clusters.

For those looking to leverage cloud platforms, Prompt Flow also provides guides for deploying on Azure App Service. And the good news doesn’t stop there! The team is actively working on expanding official deployment guides for other hosting providers. They also encourage and welcome user-submitted guides to enrich the community’s resources.

Note: Always ensure that you’re following best practices and security protocols when deploying, especially in production environments.
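Whichever option you pick, a deployed flow is typically called over HTTP. The sketch below builds such a request with the Python standard library. The base URL, port, the `/score` path, and the `question` input name are assumptions made for illustration; check the deployment guide for your platform for the actual endpoint and payload shape.

```python
import json
from typing import Any, Dict
from urllib import request


def build_score_request(base_url: str, inputs: Dict[str, Any]) -> request.Request:
    """Build a JSON POST request for a served flow (endpoint path is an assumption)."""
    body = json.dumps(inputs).encode("utf-8")
    return request.Request(
        url=f"{base_url.rstrip('/')}/score",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def call_flow(base_url: str, inputs: Dict[str, Any], timeout: float = 30.0) -> Any:
    """Send the request and decode the JSON response; needs a running server."""
    with request.urlopen(build_score_request(base_url, inputs), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


# Building the request does not touch the network, so it is safe to inspect.
req = build_score_request("http://localhost:8080/", {"question": "What is Prompt Flow?"})
print(req.get_method(), req.full_url)
```

Separating request construction from sending keeps the payload logic testable without a live deployment.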

Harnessing the Power of the Cloud with Prompt Flow

Cloud Integration: With Prompt Flow, the transition from local development to a cloud environment is seamless. Developers can craft their flows locally and then effortlessly migrate the experience to Azure Cloud, leveraging the vast resources and scalability that cloud platforms offer.

Key Features:

- Azure AI Integration: Dive deep into running Prompt Flow directly within Azure AI, making the most of Microsoft’s AI capabilities.

- Deployment on Azure App Service: Looking for a reliable cloud service to host your flows? Deploy directly to Azure App Service and ensure your applications are accessible, scalable, and secure.

For those keen on exploring how to run Prompt Flow in Azure AI, the next guide provides a comprehensive walkthrough.

Note: As you transition to the cloud, always prioritize security and compliance, ensuring that your applications adhere to best practices and industry standards.

Advantages of using Azure AI over local execution:

- Enhanced Team Collaboration: Azure AI’s Portal UI is optimized for sharing and presenting your flows and runs, making it superior for collaborative efforts. Additionally, the workspace feature efficiently organizes shared team resources, such as connections.

- Enterprise-Grade Infrastructure: Prompt Flow taps into Azure AI’s strong enterprise capabilities, offering a secure, scalable, and dependable platform for flow development, testing, and deployment.

The documentation site, linked below, provides comprehensive guides for the Prompt Flow SDK, CLI, and the VS Code extension.

Quick Links:

For more detailed information, you can explore

- Prompt Flow in Azure AI.

- RAG from cloud to local - bring your own data QnA (preview)

- 15 Tips to Become a Better Prompt Engineer for Generative AI

- Exploring Azure AI Content Safety: Navigating Safer Digital Experience

- Azure AI Content Safety Studio - QuickStart

- How we interact with information: The new era of search

- To Fine Tune or Not Fine Tune? That is the question

- How vector search and semantic ranking improve your GPT prompts

- An Introduction to LLMOps: Operationalizing and Managing Large Language Models using Azure ML
