Microsoft

Microsoft Azure AI Services is a suite of cloud-based AI services that can be used to build and deploy AI applications. The services include various tools for natural language processing, computer vision, machine learning, and more.

Azure OpenAI Service is a specific service within Azure AI Services that provides access to OpenAI’s large language models. These models can be used for various tasks, such as text generation, translation, summarization, and question-answering.
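As a rough illustration of what "access to OpenAI's large language models" looks like in practice, the sketch below builds (but does not send) a chat-completions request against the Azure OpenAI REST endpoint. The resource name, deployment name, key, and the `api_version` value are placeholders and assumptions, not values from this article; check the current API version for your own resource.

```python
import json
import urllib.request


def build_chat_request(endpoint, deployment, api_key, prompt,
                       api_version="2024-02-01"):
    """Build (but do not send) a chat-completions request for Azure OpenAI."""
    url = (f"{endpoint.rstrip('/')}/openai/deployments/{deployment}"
           f"/chat/completions?api-version={api_version}")
    body = {
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
        "max_tokens": 200,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


def extract_reply(response_json):
    """Pull the assistant text out of a parsed chat-completions response."""
    return response_json["choices"][0]["message"]["content"]


# Placeholder values (assumptions): substitute your own resource and key.
request = build_chat_request(
    endpoint="https://my-resource.openai.azure.com",
    deployment="my-gpt-deployment",
    api_key="<your-api-key>",
    prompt="Summarize what Azure OpenAI Service offers in one sentence.",
)
print(request.full_url)
```

To actually call the service, pass `request` to `urllib.request.urlopen`, parse the body with `json.load`, and hand the result to `extract_reply`.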

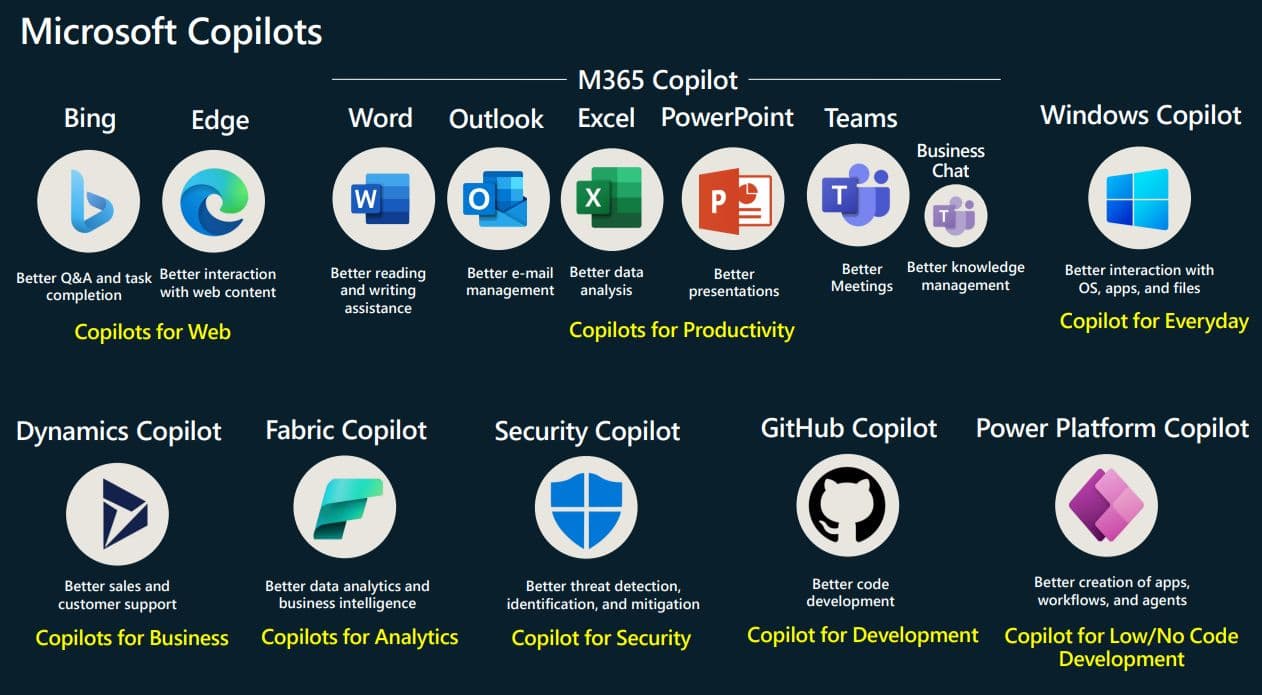

Various copilots that use Azure AI Services and Azure OpenAI Service are under development. These copilots are designed to help developers with coding, writing, and researching tasks. For example, GitHub Copilot suggests code completions using OpenAI’s Codex model, a descendant of GPT-3.

Azure AI Services, Azure OpenAI Service, and various copilots are all part of the growing field of artificial intelligence. These tools make it easier for developers to build and deploy AI applications and open up new possibilities for AI in the enterprise and beyond.

Here are some specific examples of how Azure AI Services, Azure OpenAI Service, and various copilots can be used:

- Azure AI Services can be used to build a chatbot that answers customer questions, generates marketing copy, or even writes code.

- Azure OpenAI Service can be used to create content generation tools that are more creative and informative than traditional ones.

- Various copilots can help developers with coding, writing, and researching tasks.

Read more on Microsoft 365 Copilot, Windows Copilot, Copilot for Web in Bing Chat Enterprise

These are just a few examples of how Azure AI Services, Azure OpenAI Service, and various copilots can be used. As these tools develop, they will become even more powerful and versatile.

- Text-to-Text (T2T): Microsoft Translator offers real-time translation and transcription services.

- Text-to-Audio (T2A): Azure Cognitive Services Text to Speech converts text into natural-sounding speech.

- Audio-to-Text (A2T): Azure Speech to Text transcribes audio to text with customization options.
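To make the Text-to-Text case concrete, here is a minimal sketch that prepares a request for the Translator v3.0 REST `translate` operation without sending it. The key and region are placeholders, and the helper names are made up for this example.

```python
import json
import urllib.parse
import urllib.request

TRANSLATOR_ENDPOINT = "https://api.cognitive.microsofttranslator.com"


def build_translate_request(text, to_language, key, region):
    """Build (but do not send) a Translator v3.0 translate request."""
    query = urllib.parse.urlencode({"api-version": "3.0", "to": to_language})
    return urllib.request.Request(
        f"{TRANSLATOR_ENDPOINT}/translate?{query}",
        data=json.dumps([{"Text": text}]).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Ocp-Apim-Subscription-Key": key,        # placeholder value below
            "Ocp-Apim-Subscription-Region": region,  # placeholder value below
        },
        method="POST",
    )


def first_translation(response_json):
    """Return the first translated string from a parsed response body."""
    return response_json[0]["translations"][0]["text"]


translate_request = build_translate_request(
    "Hello, world", "fr", key="<your-key>", region="<your-region>"
)
print(translate_request.full_url)
```

Sending the request with `urllib.request.urlopen` and parsing the JSON body gives a list that `first_translation` can read.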

A few examples from Bing are Bing Chat and Image Creator, powered by OpenAI models.

Azure Cognitive Service

Dive into the fascinating world of Azure Cognitive Service for Language and discover how Microsoft Azure is revolutionizing the realm of Natural Language Processing (NLP) and text analytics. From sentiment analysis and named entity recognition to custom text classification and abstractive text summarization, this article unpacks the myriad capabilities of Azure Cognitive Service. Whether you’re a business aiming to extract insights from customer feedback or a researcher navigating vast textual data, this piece offers invaluable insights into the tools that can amplify your understanding of textual data. Don’t miss out on this deep dive into the transformative power of Azure’s language processing tools!

Exploring capabilities of Azure Cognitive Service

Prompt Flow

Unveil the future of AI development with Microsoft’s Prompt Flow. Dive deep into a groundbreaking suite of tools tailored for LLM-based AI applications, streamlining everything from ideation to deployment. Whether you’re an AI enthusiast or a seasoned developer, this article offers a treasure trove of insights, from executable workflows and iterative debugging to cloud integration with Azure AI. Discover how Prompt Flow is revolutionizing prompt engineering, making AI application development more cohesive, efficient, and powerful than ever. Don’t miss out on this comprehensive guide to harnessing the full potential of Prompt Flow!

Revolutionizing AI Development with Prompt Flow

Semantic Kernel

A semantic kernel is a concept primarily used in the field of information retrieval, search engine optimization (SEO), and computational linguistics. It refers to a set of keywords and phrases that represent the core meaning or essence of a text, topic, or concept. The idea is to capture the most relevant and essential terms that define a particular subject or context. Here’s a more detailed explanation:

- Core Concept: At the heart of the semantic kernel is the idea that every topic or subject has a set of words and phrases that are most representative of its meaning. These words and phrases form the “kernel” or core of the topic’s semantic representation.

- Information Retrieval: In the context of search engines and information retrieval, a semantic kernel can help in understanding the main topics of a document or a set of documents. By identifying the semantic kernel, search engines can better match user queries to relevant documents.

- SEO: For search engine optimization, understanding the semantic kernel of a topic can help content creators ensure that their content is comprehensive and covers all relevant subtopics. By incorporating the terms from the semantic kernel, the content can be more likely to rank for a broader set of related queries.

- Computational Linguistics: In computational linguistics, the concept of a semantic kernel can be used in tasks like topic modeling, where the goal is to identify the main topics in a large collection of texts. The semantic kernel can provide insights into the main themes and subjects being discussed in the texts.

- Building the Semantic Kernel: To build a semantic kernel for a particular topic, one might start with a primary keyword or phrase and then use various tools and techniques to identify related terms, synonyms, and phrases. This could involve keyword research tools, studying top-ranking content for the topic, and using natural language processing (NLP) techniques.

- Benefits:

  - Precision: By focusing on the core terms that define a topic, the semantic kernel ensures that the most relevant information is captured.

  - Relevance: In SEO and information retrieval, using a semantic kernel can improve the relevance of search results, leading to better user satisfaction.

  - Comprehensiveness: For content creators, understanding the semantic kernel ensures that all facets of a topic are covered in their content.

In summary, a semantic kernel is a set of keywords and phrases that capture the essence of a topic. It’s a valuable concept in various fields, from SEO to computational linguistics, helping to improve the precision, relevance, and comprehensiveness of information.
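The “Building the Semantic Kernel” step can be approximated in a few lines. The sketch below is one simple way to do it, assuming plain term frequency with a small hand-picked stopword list; real keyword research tools and NLP pipelines use richer signals, and the function name is invented for this example.

```python
import re
from collections import Counter

# Small illustrative stopword list (an assumption, not a standard resource).
STOPWORDS = {"a", "an", "and", "are", "for", "in", "into", "is", "it",
             "of", "on", "or", "the", "to", "with"}


def semantic_kernel_terms(text, top_k=5):
    """Return the top_k most frequent non-stopword terms in the text."""
    words = re.findall(r"[a-z]+", text.lower())
    counts = Counter(w for w in words if w not in STOPWORDS and len(w) > 2)
    # Sort by descending count, then alphabetically, so ties are stable.
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [term for term, _ in ranked[:top_k]]


sample = ("Solar panels convert sunlight into electricity. "
          "Solar energy is renewable, and solar panels need little "
          "maintenance. Panels last for decades.")
print(semantic_kernel_terms(sample, top_k=2))  # ['panels', 'solar']
```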

Distinct from the general concept above, Microsoft has released Semantic Kernel as an open-source project on GitHub, available in .NET and Python, with TypeScript and Java versions coming soon.

Semantic Kernel is an open-source SDK developed by Microsoft that allows developers to seamlessly integrate AI services, such as OpenAI, Azure OpenAI, and Hugging Face, with conventional programming languages like C# and Python. This integration enables the creation of AI applications that harness the strengths of both AI and traditional programming.

Microsoft’s documentation for the open-source Semantic Kernel SDK is available at https://aka.ms/semantic-kernel

Microsoft’s Copilot system, which is powered by a combination of AI models and plugins, uses Semantic Kernel as its central AI orchestration layer. This layer facilitates the combination of various AI models and plugins to provide novel user experiences. Developers can use Semantic Kernel to replicate the AI orchestration patterns seen in Microsoft 365 Copilot and Bing.

Semantic Kernel is designed to be highly extensible. It offers connectors that allow developers to integrate AI services into their existing applications. These connectors enable the addition of “memories” and models, essentially giving the application a simulated “brain”. Additionally, AI plugins can be added to the system, acting as the “body” of the AI application, allowing it to interact with the real world.

With Semantic Kernel, developers can create sophisticated AI pipelines that automate complex tasks. For instance, it can be used to automate the process of sending an email by retrieving relevant information, planning the steps using available plugins, and then sending the email.

While developers can directly use the APIs of popular AI services, doing so requires learning each service’s API and integrating it into their applications. Direct API usage also doesn’t leverage recent AI advancements that offer solutions on top of these services. Semantic Kernel simplifies this process, making it easier for developers to integrate AI into their apps.

Key Components of Semantic Kernel:

- Ask: The process begins with a goal sent to Semantic Kernel by a user or developer.

- Kernel: Orchestrates the user’s request by running a defined pipeline. It provides a common context for data sharing between functions.

- Memories: Using specialized plugins, developers can recall and store context in vector databases, simulating memory in AI apps.

- Planner: Allows Semantic Kernel to auto-create chains to address user needs by combining existing plugins.

- Connectors: Used to fetch additional data or perform actions using plugins like the Microsoft Graph Connector kit.

- Custom Plugins: Developers can create custom plugins that run inside Semantic Kernel, either as LLM prompts (semantic functions) or native C# or Python code.

- Response: Once the kernel completes its tasks, a response is sent back to the user.

Semantic Kernel is Microsoft’s solution to the challenges of integrating AI into traditional applications. It offers a flexible and extensible platform for developers to harness the power of AI in their apps, without the need to delve deep into the intricacies of individual AI service APIs.
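The flow from ask to response can be pictured with a toy orchestrator. The sketch below is not the Semantic Kernel SDK and does not use its API; `ToyKernel`, its methods, and the three plugins are hypothetical names invented here to illustrate how a kernel, memories, a planner, and plugins might fit together for the email example mentioned earlier.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Plugin = Callable[[Dict[str, str]], None]


@dataclass
class ToyKernel:
    """Hypothetical stand-in for an orchestration kernel (not the real SDK)."""
    plugins: Dict[str, Plugin] = field(default_factory=dict)
    memory: Dict[str, str] = field(default_factory=dict)

    def register(self, name: str, plugin: Plugin) -> None:
        self.plugins[name] = plugin

    def plan(self, ask: str) -> List[str]:
        # A real planner would ask an LLM; this one uses a fixed rule.
        if "email" in ask.lower():
            return ["lookup_contact", "draft_email", "send_email"]
        return []

    def run(self, ask: str) -> str:
        # The shared context lets each plugin read what earlier ones wrote.
        context = {"ask": ask, **self.memory}
        for step in self.plan(ask):
            self.plugins[step](context)
        return context.get("response", "No plan found for this ask.")


def lookup_contact(ctx):
    ctx["recipient"] = ctx.get("contact:alice", "unknown@example.com")


def draft_email(ctx):
    ctx["draft"] = f"To {ctx['recipient']}: {ctx['ask']}"


def send_email(ctx):
    # Pretend to send; a real plugin would call a mail connector here.
    ctx["response"] = f"Sent -> {ctx['draft']}"


kernel = ToyKernel()
kernel.memory["contact:alice"] = "alice@example.com"
for plugin in (lookup_contact, draft_email, send_email):
    kernel.register(plugin.__name__, plugin)

print(kernel.run("Email Alice the weekly report"))
```

The design point is the shared context dictionary: plugins stay independent of one another, and the planner only decides their order.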

Iterative Self-Refinement with GPT-4V(ision) for Automatic Image Design and Generation

Idea2Img on Microsoft Azure AI

“Idea2Img” is a system that transforms a textual idea into a matching image. As the title above indicates, it relies on iterative self-refinement with GPT-4V(ision): the model writes a prompt for a text-to-image generator, inspects the draft image, and revises the prompt until the result fits the idea. Whether you’re looking to visualize a complex concept, a fictional character, or just experiment with the capabilities of AI in image generation, “Idea2Img” offers a glimpse into the future of AI-driven content creation.
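The “iterative self-refinement” named in the title can be pictured as a generate, critique, revise loop. The sketch below is a simplified illustration of that general pattern, not the method from the paper: `generate` and `critique` are hypothetical callables standing in for an image generator and a vision-language model, and the stub versions here only manipulate strings.

```python
def refine_until_good(idea, generate, critique, max_rounds=3):
    """Generate, critique, and revise the prompt for up to max_rounds."""
    prompt = idea
    image = generate(prompt)
    for _ in range(max_rounds):
        ok, feedback = critique(idea, image)
        if ok:
            break
        # Fold the feedback into the prompt and try again.
        prompt = f"{prompt}, {feedback}"
        image = generate(prompt)
    return prompt, image


# Stub "models" (assumptions) so the loop can run offline.
def stub_generate(prompt):
    return f"<image of: {prompt}>"


def stub_critique(idea, image):
    wanted = "warm orange lighting"
    return (wanted in image, wanted)


final_prompt, final_image = refine_until_good(
    "a bicycle at sunset", stub_generate, stub_critique
)
print(final_prompt)  # a bicycle at sunset, warm orange lighting
```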

The research paper titled “CLIPDraw: Exploring Text-to-Drawing Synthesis through Language-Image Encoders” presents a novel approach to synthesizing drawings directly from textual descriptions using a model called CLIPDraw. This model leverages the capabilities of CLIP, a vision-language neural network, to generate drawings that visually represent the given textual prompts. Unlike traditional methods that rely on intermediate representations or specific datasets, CLIPDraw harnesses the power of CLIP’s pre-trained knowledge, enabling it to produce a wide variety of drawings spanning different domains. The results demonstrate the model’s ability to generate coherent and contextually relevant drawings, highlighting the potential of language-image pre-training in the realm of text-to-drawing synthesis. This research paves the way for further exploration into the intersection of natural language processing and computer graphics, with potential applications in design, education, and entertainment.

OpenAI API, Azure OpenAI Service, PaLM API, Google Cloud Vertex AI, and Meta LLaMA-2

Getting started

Text Generation

To get started with text generation, see the walkthroughs below for the OpenAI Python SDK, LangChain, and Semantic Kernel. Between them they cover both the OpenAI API and Azure OpenAI Service.

- Text Completion covers text generation via OpenAI Python SDK

- Text Completion via LangChain covers OpenAI, Azure OpenAI Service

- Text Completion via Semantic Kernel covers OpenAI and Azure OpenAI Service
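As a taste of what the OpenAI Python SDK route looks like, here is a minimal sketch written against the v1-style `client.chat.completions.create` call. The helper name `generate_text` and the fake client are assumptions added so the example runs offline; with a real client the model name must be one your account or Azure deployment actually exposes.

```python
from types import SimpleNamespace


def generate_text(client, model, prompt, max_tokens=100):
    """Send one user message and return the generated text."""
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
        max_tokens=max_tokens,
    )
    return response.choices[0].message.content


class FakeCompletions:
    """Offline stand-in that mimics the shape of the SDK response."""

    def create(self, model, messages, max_tokens):
        text = f"[{model}] echo: {messages[-1]['content']}"
        message = SimpleNamespace(content=text)
        return SimpleNamespace(choices=[SimpleNamespace(message=message)])


fake_client = SimpleNamespace(
    chat=SimpleNamespace(completions=FakeCompletions())
)
print(generate_text(fake_client, "demo-model", "Hello"))
```

With the real SDK installed, `client = OpenAI()` (from `openai`) reads `OPENAI_API_KEY` from the environment, while `AzureOpenAI(azure_endpoint=..., api_key=..., api_version=...)` targets Azure OpenAI Service, where `model` is the deployment name. The same `generate_text` function works with either client.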

Image Generation

To get started with image generation, see the walkthroughs below for the OpenAI Python SDK.

Text-to-Image

- Text to Image via OpenAI Python SDK covers DALL-E 2

Image-to-Image or Image variations

- Image to Image via OpenAI Python SDK covers DALL-E 2
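Both image scenarios map onto two calls in the v1-style OpenAI Python SDK, `images.generate` and `images.create_variation`. The sketch below wraps them in two small helpers; the helper names and the fake client are assumptions added so the example runs without network access or an API key.

```python
from types import SimpleNamespace


def text_to_image_url(client, prompt, size="512x512"):
    """Text-to-image: ask DALL-E 2 for one image and return its URL."""
    response = client.images.generate(
        model="dall-e-2", prompt=prompt, n=1, size=size
    )
    return response.data[0].url


def image_variation_url(client, image_path, size="512x512"):
    """Image-to-image: request one variation of an existing PNG file."""
    with open(image_path, "rb") as image_file:
        response = client.images.create_variation(
            image=image_file, n=1, size=size
        )
    return response.data[0].url


class FakeImages:
    """Offline stand-in that mimics the shape of the SDK response."""

    def generate(self, model, prompt, n, size):
        url = f"https://example.invalid/{model}/{size}.png"
        return SimpleNamespace(data=[SimpleNamespace(url=url)])

    def create_variation(self, image, n, size):
        url = f"https://example.invalid/variation/{size}.png"
        return SimpleNamespace(data=[SimpleNamespace(url=url)])


fake_client = SimpleNamespace(images=FakeImages())
print(text_to_image_url(fake_client, "a watercolor lighthouse at dawn"))
```

Swapping `fake_client` for a real `OpenAI()` client leaves the two helpers unchanged.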

More comprehensive demos are available on

- LLM Scenarios, Use cases on the Gradio App

- Also, source code on GitHub

Further references

- Introducing ChatGPT on Azure OpenAI Service

- Getting Started with Azure AI Studio’s Prompt Flow

- Microsoft-Responsible AI Support for Image and Text Models

- Microsoft Responsible AI Toolbox

- Responsible AI Dashboard blog

- Take a Tour: Responsible AI Toolbox

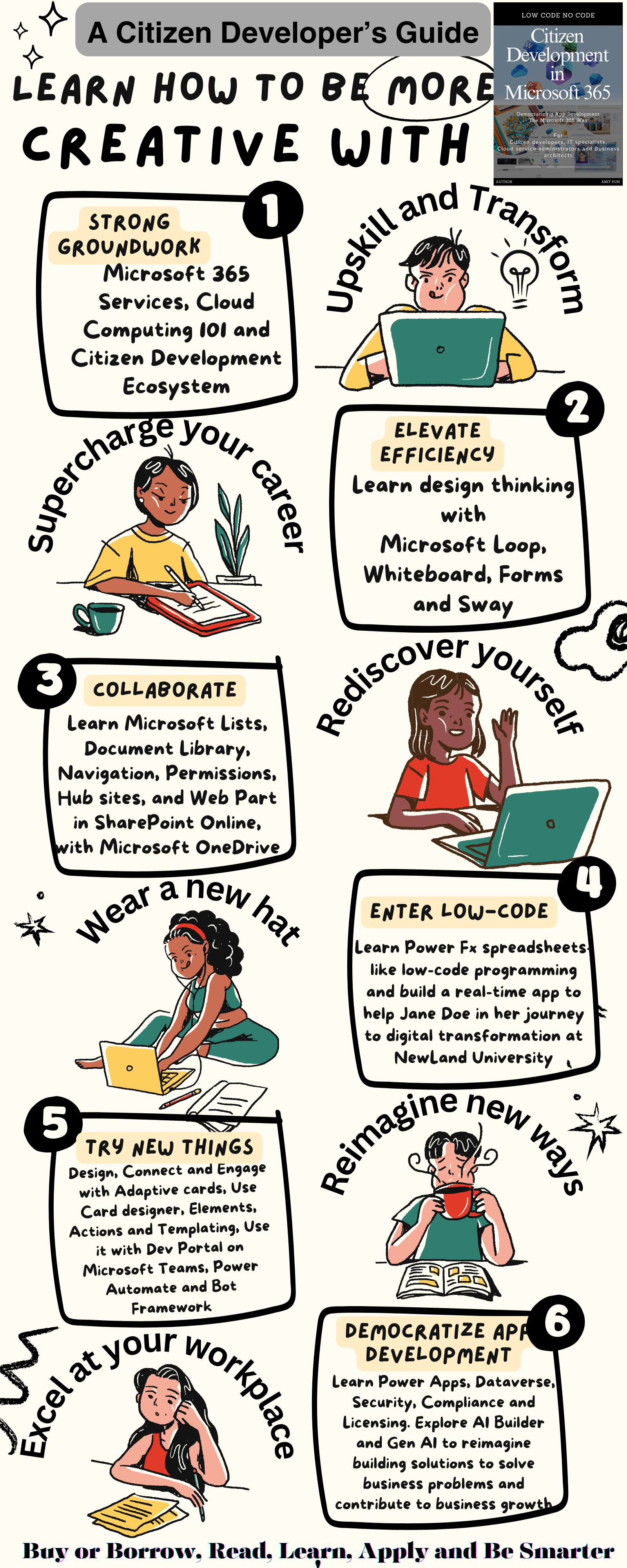

- Azure OpenAI with Power Platform: Unleashing Citizen Developers’ Potential

- Introducing Semantic Kernel: Building AI-Based Apps

- Semantic Kernel: What It Is and Why It Matters

- Chat + Your Data + Plugins

- Azure OpenAI: Generative AI Models and How to Use Them

- Scale generative AI with new Azure AI infrastructure advancements and availability

- Career Essentials in Generative AI by Microsoft and LinkedIn

- CoDi: Any-to-Any Generation via Composable Diffusion

- Orca: Progressive Learning from Complex Explanation Traces of GPT-4

- SpeechX

- SpeechX: Neural Codec Language Model as a Versatile Speech Transformer

- Azure AI Language Studio Summarize information tryout

- Welcoming the generative AI era with Microsoft Azure

- Intelligent Apps Generative AI capabilities with Azure Kubernetes Service (AKS)

- Dynamic Sqlite queries with OpenAI chat functions

- OpenAI tokens and limits

- Building your own DB Copilot for Azure SQL with Azure OpenAI GPT-4-

- Prompt engineering tips

- Microsoft’s Satya Nadella is winning Big Tech’s AI war. Here’s how

- Generative AI and prompting 101

- Generative AI for Beginners

- Azure AI samples Hub - a curated awesome list

- An Introduction to LLMOps: Operationalizing and Managing Large Language Models using Azure ML

- Building for the future: The enterprise generative AI application lifecycle with Azure AI

Prompt Engineering

- Prompt Engineering Guide

- Microsoft Learn - Prompt engineering techniques

- ReAct: Synergizing Reasoning and Acting in Language Models

- LLMs that Reason and Act

- GitHub - A developer’s guide to prompt engineering and LLMs

- Tips for Taking Advantage of Open Large Language Models

OpenAI

- GPT-2 Output Detector https://openai-openai-detector.hf.space

- AI Content Detector - writer.com https://writer.com/ai-content-detector

- AI DETECTOR - content at scale https://contentatscale.ai/ai-content-detector

- GPT-3 Demo - Use cases https://gpt3demo.com

- GPTZero https://gptzero.me

- Tokenizer https://platform.openai.com/tokenizer

- Playground https://platform.openai.com/playground

- DALL-E https://labs.openai.com

- OpenAI ChatGPT & GPT-3 API pricing calculator https://gptforwork.com/tools/openai-chatgpt-api-pricing-calculator

- OpenAI Pricing & Tokens Calculator https://www.gptcostcalculator.com

- Codex JavaScript Sandbox https://platform.openai.com/codex-javascript-sandbox

- OpenAI Cookbook https://github.com/openai/openai-cookbook

- Azure Open AI (demos, documentation, accelerators) by Serge Retkowsky https://github.com/retkowsky/Azure-OpenAI-demos

- To Fine Tune or Not Fine Tune? That is the question

- How vector search and semantic ranking improve your GPT prompts
